10 key takeaways from EU DisinfoLab’s 2023 conference

Last week, for the third year in a row, we had the pleasure of attending EU DisinfoLab’s 2023 conference. Having left Brussels for the beautiful city of Krakow, Poland, the event remained a must for anyone looking to stay ahead of the game in the fight against disinformation.

We are very proud that three Check First projects were referenced during the conference. We were also given the floor to present Facebook Hustles, our latest investigation. What stuck with us? Here are 10 thoughts we gathered on the way home.

1. Disinformation has real-life consequences.

It may sound obvious, but disinformation has the power to change (or, even worse, destroy) lives. We had the chance to hear powerful testimonies, such as the opening talk by Brent Lee, a recovering conspiracist who hosts the podcast “Some dare call it conspiracy”. He told the audience how he went down the rabbit hole and became more and more isolated: “No one left me […] I left them”. The moment someone was shot in the neck at the Capitol is the moment Brent chose to speak out. “It scared me that it had become a real life event”. The turning point in Brent’s journey came when he escaped his locked state of mind through sheer logic: “When Trump got elected and then Brexit happened, this didn’t make sense to me. If there were a global conspiracy, it didn’t match the objectives of a great plan to control us all”. Slowly, Brent realised he had been misled and decided to tell his story to the largest possible audience to help people get back in touch with reality.

Speaking of real-life events, some testimonies left us less hopeful: Emma Le Mesurier spoke about how she is still fighting the lies that followed her husband James’s death, and about the challenges of restoring the truth. We also heard how Fox News “irrevocably changed” Nina Jankowicz’s life, and how she is fighting back. Imran Ahmed (Center for Countering Digital Hate) delivered a powerful closing keynote on how to keep the faith and continue to fight for the truth as a right.

2. Fighting disinformation can have mental health consequences we need to be aware of.

Our community is regularly exposed to violent content, online harassment, physical threats, lawsuits and other “occupational hazards” such as burnout. We need to build a stronger mental health support system to keep our work sustainable. Jochen Spangenberg (Deutsche Welle) encouraged newsroom managers to provide their staff with experts (psychologists) on call 24/7 and to establish clear guidelines. Another hot topic during the conference was how vicarious trauma can affect OSINT researchers (on that topic, Bellingcat’s excellent “How to Maintain Mental Hygiene as an Open Source Researcher” is a must-read).

3. The field of countering disinformation is empowered by a strong sense of community that can accomplish a lot

Disinformation has become a major concern, particularly since Covid, and initiatives are flourishing all over the world to tackle it in every possible way. This is a small world (as the years pass, faces become more and more familiar in the EU DisinfoLab conference room), but it is growing rapidly: we see more new people at every event. The story of VOST Europe, and of how Portuguese volunteers identified and debunked disinformation on WhatsApp, shows how citizens are joining forces to tackle disinformation.

4. At the same time, all these initiatives seek to establish working frameworks and get organised

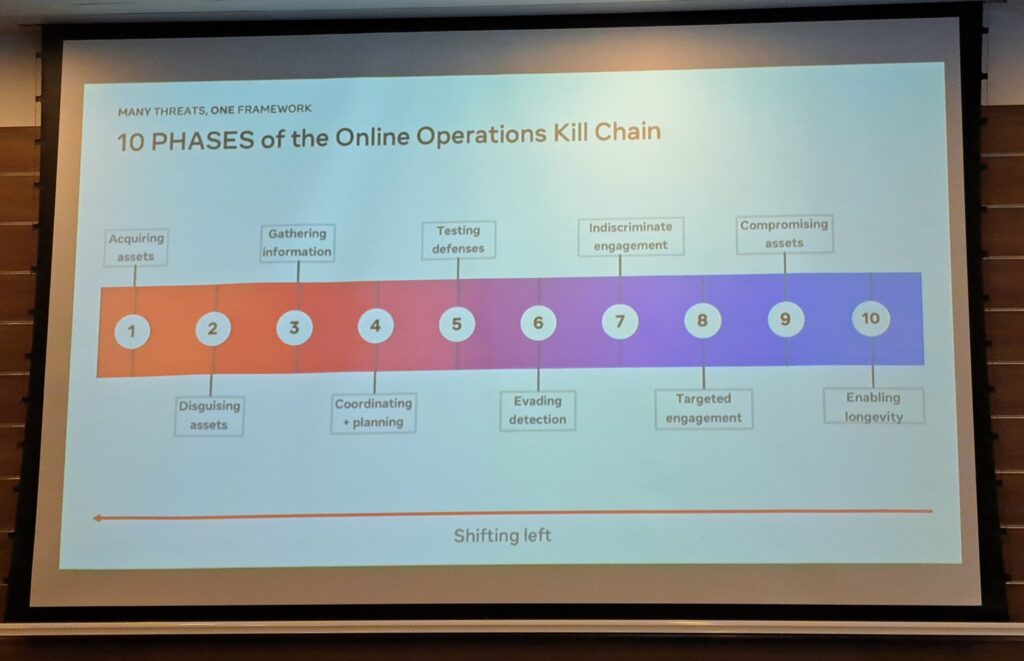

A particularly pragmatic segment of this conference was the ‘how-to’ section. It showed how Information Operations experts at Meta identify the phases of an online operation, using their ‘Kill Chain’ procedure.

It also covered how to conduct OSINT investigations in line with ObsInt guidelines, and how to preserve the information you gather so that you keep a record of content that might later be removed from the Internet. Advice was also given on maintaining morale during more trying times. These inspiring methodologies, concepts and frameworks are invaluable for shaping future investigations and improving everyone’s way of working. It was a great opportunity to return to the office with fresh perspectives and approaches to work.

5. Since we all face similar problems, we develop similar solutions. Let’s open-source and work together!

This is an important takeaway from this year’s conference. As Kalina Bontcheva (University of Sheffield) underlined, “there is a need for us here to work as a community. The tools we build are similar to those the others in the community build because we all deal with the same issue. If we can do it with open source tools, it can benefit the entire community.”

Under the DSA, there are seventeen Very Large Online Platforms (VLOPs) and two Very Large Online Search Engines (VLOSEs), as well as plenty of smaller, yet important, platforms where disinformation spreads. Many of us are building tools to monitor content on these platforms, and many are keen to open-source them and collaborate so that we don’t duplicate each other’s work and can better allocate our resources and time. Check First didn’t need to be reminded of this pressing need: we open-sourced the scrapers we used during the CrossOver project to compare the data we collected with the information provided by the platforms’ official APIs. We also built Tikadrchivist, an unofficial TikTok Ads Library API search tool that helps researchers navigate TikTok’s Ads Library with a range of customisable search parameters such as queries, regions and sorting options.

6. Speaking of monitoring, we need a little help from platforms on data accessibility.

Marc Faddoul (AI Forensics) reminded the audience that although platforms like TikTok are giving some researchers access to their API, the terms of service of TikTok’s researcher API force users to submit their project for approval. “If you tell the person you are going to watch that you are going to watch them and on which points, it raises ethical questions”, Marc added. We worked on that topic with the Mozilla Foundation earlier this year.

7. Transparency is no silver bullet: we need accountability for what we highlight.

As John Albert (AlgorithmWatch) underlined in his talk, “companies have been backsliding on particular commitments to transparency”. Brandi Geurkink (Mozilla) also reminded the audience that “the field of disinformation research is being weaponised by several actors serving a political agenda. The aim [of reducing access to data] is to cut off the link with researchers sharing findings with governments which is how they can make informed decisions”.

We keep repeating it: we need platforms to take action on problematic content when a disinformation campaign is reported. A promise of future action when cornered at a conference is not enough. Doppelganger is still going on, as are the scams exposed in Facebook Hustles, and so are their effects on democracy and user safety. After our fruitful discussions in Krakow, we look forward to hearing from platforms about the actual measures put in place to moderate this type of content, and we would be happy to lend a hand if needed.

8. Legislation is being drafted, agreed and voted upon. That’s exciting! Let’s enforce it now.

From last year’s conference, many remembered Commissioner Věra Jourová’s stance on the media exemption before it was removed from the DSA draft. She had described it as an idea to be put “in the box of good intentions leading to hell”. The media exemption was indeed taken out of the DSA, but as of October 2023 it is coming back as the European Media Freedom Act is being negotiated. The sentiment in the room did not change: the media exemption shall not pass, as it would risk empowering bad actors to use it to spread disinformation.

The upcoming AI Act was also on many attendees’ and panelists’ minds, with many stressing that robust regulation is needed to curb potential harm as we witness a surge in AI-generated disinformation.

Of course, the DSA was omnipresent on stage and during coffee-break conversations. As Gregory Rhode (EU DisinfoLab Board) summarised: “Last year we were using the future tense to speak about the DSA, now it’s here and we’re all waiting to see how it will be enforced”. In that context, it was no surprise to meet many national Digital Services Coordinators (DSCs), both already designated and anticipated, since they will be in charge of a large part of the DSA’s enforcement. Adding some spice to the conference, the Breton/Musk confrontation over social media discourse about the events in the Middle East took place right before the conference began. This wasn’t the most comfortable time for European Commission representatives to be on stage, but it allowed the room to learn that the EC is monitoring the situation closely, “collecting data”.

9. It’s the attention economy, stupid.

In the digital Wild West, users’ attention is gold. In the war for screen time, disinformation actors use psychology as their shield and algorithmic manipulation as their slingshot. As Emerson Brooking (DFRLab) stated in his talk “What Russia-Ukraine teaches us about attention and warfare”, attention has a half-life and demands novelty, and novelty is not reality. “This makes the Internet crucial to understand conflicts but also deeply unreliable”. In the context of Russia’s invasion of Ukraine, support for Ukraine is directly related to public opinion. “Ukraine needs attention more”, said Emerson, and because of Russia “there’s been a spread of apathy and the public’s disengagement”.

Speaking of the attention economy, Nandini Jammi (Check My Ads), Clare Melford (GDI) and Sam Jeffers (Who Targets Me) all pointed out interesting ways to act on the attention economy stack. We learned, for example, that “9 out of 10 accounts that use Google ads are confidential, we don’t know who is behind them, Google does.” Not to forget our own presentation of Facebook Hustles, which showed how scammers abuse Meta, its users and renowned media brands to lure users into money-pumping schemes.

10. When in the eye of the storm, taking a step back is always a good idea.

As crucial as regulations, tools and applications are, it is sometimes necessary to take a break from them and step back to look at what the community is facing.

Taking a bird’s-eye view of the questions affecting all of us, Théophile Lenoir (University of Milan) reminded us how “delving into the science war debate can help us face tomorrow’s challenges. There is a history of how the quality of information is defined, and this definition serves a purpose”.

This is a question of rights, of objectivity as a universal right.

Kudos to EU DisinfoLab for putting together an essential conference, and many thanks to their team for making it run like a Swiss clock. As always, the organisers took special care to tell a story during the event, pacing the sessions like the scenes of a good movie. From a strong opening rooted in the real world thanks to Brent Lee, to an invigorating closing speech by Imran Ahmed, attendees had a unique opportunity to learn, share and network. Thanks for strengthening the community.