CheckFirst and Faktabaari present a new study showing Finnish far-right videos highly recommended by YouTube during the presidential race

CheckFirst and Faktabaari present their first report on the CrossOver Finland project, a significant milestone.

Explore concerning trends in YouTube’s search and recommendation algorithms during the 2024 Finnish presidential election campaign: more recommendations for far-right and right-wing candidates, and a funnelling effect that steers users towards a limited set of channels.

Previous research shows that two thirds of the Finnish population uses YouTube frequently. In the context of the 2024 Finnish presidential election this past February, CheckFirst and the fact-checking and media literacy NGO Faktabaari collaborated to investigate the impact of YouTube’s recommendation system on users. Search queries were made on the platform using a list of 77 keywords curated by Faktabaari and CheckFirst, ranging from the candidates’ names and their political parties to topical campaign themes. Search results and recommendations were stored daily and analysed. Our findings demonstrate the existence of a “funnelling effect” in search and recommendation results, meaning that users engaged in a diverse range of political searches were directed by YouTube’s recommendation system towards a limited set of video channels. Across the 77 tracked search terms, 9 YouTube channels systematically appeared in the recommendations 85% of the time. This narrowing of users’ options left them exposed to a concentrated selection of content providers, potentially influencing their perspectives.

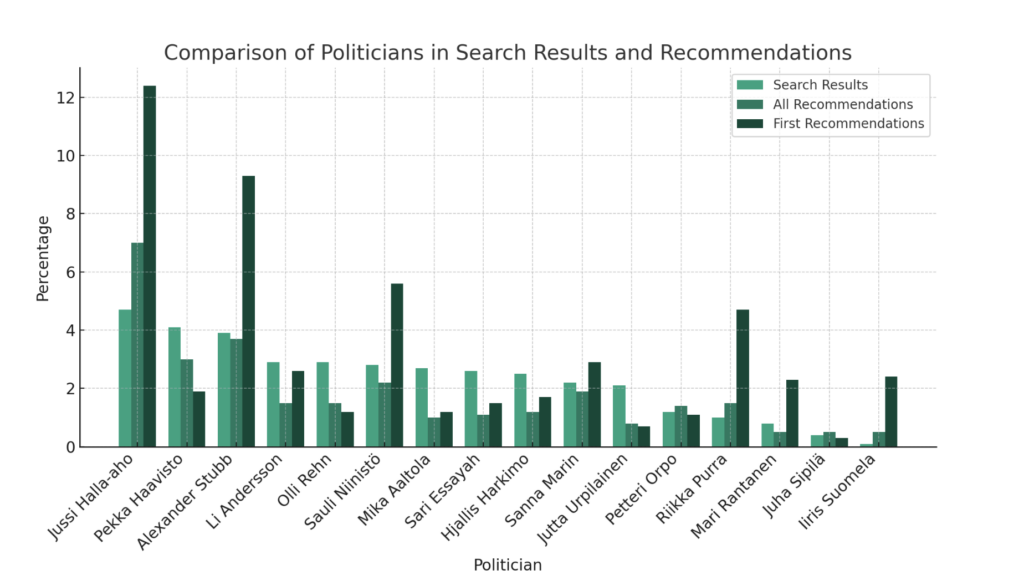
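As an illustration of how a funnelling effect of this kind could be quantified, here is a minimal Python sketch. It is not the method used in the report. The record layout (pairs of search term and recommended channel), the function names and the toy data are all assumptions made for this example.

```python
from collections import Counter


def top_channel_share(recommendations, k):
    """Fraction of all recommendation slots held by the k most recommended channels.

    `recommendations` is a list of (search_term, channel) pairs; this layout
    is a hypothetical one chosen for the example.
    """
    counts = Counter(channel for _term, channel in recommendations)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(n for _channel, n in counts.most_common(k)) / total


def term_coverage(recommendations):
    """For each channel, the fraction of distinct search terms that surfaced it."""
    terms = {term for term, _channel in recommendations}
    terms_by_channel = {}
    for term, channel in recommendations:
        terms_by_channel.setdefault(channel, set()).add(term)
    return {ch: len(ts) / len(terms) for ch, ts in terms_by_channel.items()}


# Toy data, invented for illustration only.
sample = [
    ("election", "channel_a"),
    ("election", "channel_b"),
    ("nato", "channel_a"),
    ("nato", "channel_a"),
    ("economy", "channel_a"),
    ("economy", "channel_c"),
]

print(top_channel_share(sample, 1))
print(term_coverage(sample))
```

In this toy data, a single channel holds four of the six recommendation slots and appears for every search term. A high value on both measures for a small set of channels is the kind of concentration the term “funnelling effect” describes.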

Far-right and right-wing candidates over-represented in recommendations

We also found a significant overrepresentation of right-wing voices in YouTube’s video recommendations during the Finnish presidential election. The far-right Finns Party and its candidates were disproportionately promoted compared to others in the search results. A staggering 19.3% of the first recommendations for users exploring political videos were videos featuring politicians from the Finns Party, hinting at a potential bias in the algorithm. This is important, as the “first recommended video” plays automatically after the end of the currently watched video. Alexander Stubb, the centre-right National Coalition candidate, was present in 9.3% of first recommendations. By comparison, the first candidate from the left to appear in the recommendations was the Green League’s Iiris Suomela, present in less than 3% of first recommendations. However, almost all of the first recommendations in question are a single video in which Suomela debates Riikka Purra, the current leader of the Finns Party.

Our findings suggest that YouTube’s recommendation system could be seen as biased: instead of surfacing a range of viewpoints, it amplifies a narrow spectrum of political content with a particular leaning. This raises concerns about the platform’s role in shaping public opinion, especially in the context of a democratic election where diverse perspectives are crucial.

For Guillaume Kuster, CEO of CheckFirst, “Our findings show the continuous necessity to independently monitor algorithmic recommendations. We welcome the recently adopted European Digital Services Act, enabling unparalleled scrutiny on big platforms and their effect on societies. However, adversarial testing exemplified by initiatives like CrossOver remains a vital instrument to identify risks and problematic behaviour in these automated systems. This approach is essential to better inform citizens, platforms and regulators alike, enabling them to find solutions together to build a better, fairer internet, particularly in the context of elections.”

The asymmetry of recommendations may be an unintentional consequence of the algorithm’s goal of keeping users on YouTube, says Faktabaari researcher Aleksi Knuutila. “YouTube should change the way its recommendation system works so that it does not narrow the range of perspectives offered to viewers.”

How to improve the situation? Our recommendations

Our research proposes potential solutions to mitigate algorithmic bias in YouTube recommendations. One suggested remedy is a total shift in the algorithmic logic towards prioritising diversity of viewpoints over click-driven metrics. By fostering a more inclusive algorithm, YouTube could contribute to a healthier and more informed society.

To address concerns about political bias, we recommend that platforms such as YouTube publish the risk assessments and risk mitigation measures required by the DSA as part of their obligations as Very Large Online Platforms. YouTube is subject to this regulation as a very large online platform (VLOP), designated as such by the European Commission as one of the 17 VLOPs reaching at least 45 million monthly active users in the European Union.

Faktabaari co-founder Mikko Salo emphasises the role of political content in engaging YouTube users: “Political content and debate make YouTube interesting for many users. Political videos have their place in modern political culture”.

YouTube’s influence on political opinions should be further monitored and researched. Our study in Finland reveals a gap between YouTube’s declared efforts to diversify content and the actual outcomes in smaller language groups. As the EU elections approach, this disconnect is concerning. It underscores the need for more research and for more effective measures by the platform to ensure a balanced political discourse on YouTube. As the digital landscape continues to shape public discourse, it becomes increasingly important to reflect the diversity of political discourse online and to provide balanced and fair content recommendations for citizens.

Discover our dashboards available here. Read our full report here.

If you have any questions, don’t hesitate to reach out to us here!

On 01/03/2024, the authors sent a request for comment to YouTube. As of the day of publication, we have not received an answer.