Unchecked Political Ads: A Surge of Pro-Russian Propaganda on Meta’s Platforms Ahead of EU Elections

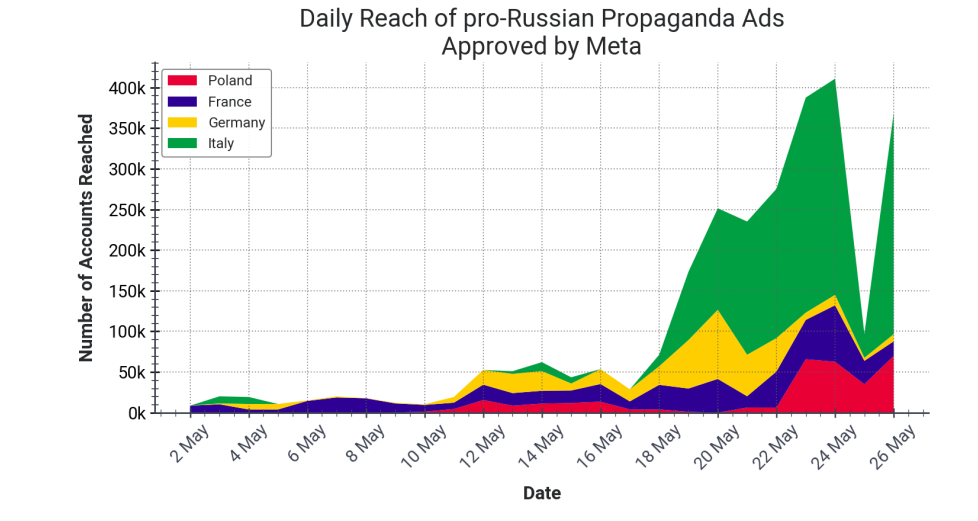

Between May 1 and May 27, 2024, Meta approved at least 275 pro-Russian propaganda ads targeting Italy, Germany, France, and Poland. These ads, lacking the required political disclaimers, reached over three million accounts across these nations.

Working with AI Forensics, we found that these pro-Russian ads are part of a larger, troubling trend. Together, they reached 3,075,063 accounts: 1,441,543 in Italy, 854,052 in France, 429,369 in Germany, and 350,099 in Poland. The timing is particularly critical: the ads ran just weeks before the European Parliament elections, when they could influence public opinion and voter behavior.

This issue follows the European Commission's March 2024 guidelines under the Digital Services Act (DSA), which aim to mitigate election-related online risks. The guidelines stress the need for robust policies that prevent advertising from being misused to spread misinformation and enable foreign interference. Despite this guidance, Meta's advertising systems appear vulnerable to exploitation, allowing misleading content to spread unchecked.

A previous investigation by AI Forensics found that between August 2023 and March 2024, Meta allowed more than 40 million accounts in France and Germany to be exposed to similar pro-Russian propaganda. These findings, along with others such as our Facebook Hustles investigation, prompted the European Commission to open formal proceedings on April 30, 2024, into potential DSA violations by Meta.

The approved ads bypassed Meta's moderation systems using simple obfuscation techniques, such as inserting hidden characters and splitting words. Despite Meta's policies, these ads were not flagged during the initial review process. That such basic tricks worked points to a significant gap in Meta's content moderation and suggests that more robust detection methods are urgently needed.
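Meta does not publish how its ad review works, and this article does not list the exact characters used. As an illustration only, here is a minimal Python sketch of the kind of text normalization that defeats these two tricks, assuming the hidden characters are invisible Unicode format characters (such as the zero-width space U+200B) and that words are split with spaces or punctuation. The function names `normalize_ad_text` and `flag_ad` and the sample keywords are hypothetical.

```python
import unicodedata


def normalize_ad_text(text: str) -> str:
    """Return a lowercase, compacted form of `text` for keyword matching.

    Hypothetical helper for illustration, not Meta's actual pipeline.
    - NFKC folds compatibility characters (e.g. full-width letters) to plain forms.
    - Keeping only letters and digits drops invisible format characters
      (e.g. U+200B), spaces and punctuation, so split words are rejoined.
    - casefold() makes the comparison case-insensitive.
    """
    text = unicodedata.normalize("NFKC", text)
    return "".join(ch for ch in text if ch.isalnum()).casefold()


def flag_ad(text: str, keywords: list[str]) -> list[str]:
    """Return the (hypothetical) keywords found in the normalized ad text."""
    compact = normalize_ad_text(text)
    return [kw for kw in keywords if normalize_ad_text(kw) in compact]


if __name__ == "__main__":
    # A zero-width space hides "war"; a hyphen splits "Ukraine".
    sample = "Stop the w\u200bar in Ukr-aine"
    print(flag_ad(sample, ["Ukraine", "NATO"]))
```

Removing every space and punctuation mark is deliberately aggressive: it catches split words but can also create false matches across word boundaries, so a real system would pair it with human review or more careful tokenization.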

Ads rarely come up in discussions of electoral integrity and foreign manipulation attempts. However, our work shows that malicious actors are well aware of how useful social media ads are for pushing their narratives. The surge of illegal ads slipping past the platforms' rules is a wake-up call for Meta and for regulators to enforce the DSA more thoroughly.

The dissemination of these ads, particularly in the weeks leading up to the EU elections, underscores the urgent need for more effective content moderation and stricter enforcement of advertising policies on social media platforms. The widespread reach of these ads poses significant risks to civic discourse, electoral integrity, and consumer protection in the EU.

As stakeholders in the digital space, we remain committed to highlighting these issues and advocating for stronger regulatory measures to safeguard our digital public sphere. Our findings reveal a critical need for platforms like Meta to strengthen their content moderation systems and for regulators to enforce stricter oversight to protect democratic processes.

Key Findings:

- Targeted Reach: 275 ads reached a total of 3,075,063 accounts.

- Italy: 61 ads reached 1,441,543 accounts.

- France: 101 ads reached 854,052 accounts.

- Germany: 75 ads reached 429,369 accounts.

- Poland: 38 ads reached 350,099 accounts.
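The country figures can be checked against the headline totals. This short Python sketch uses only the numbers reported above: it sums them and derives each country's share of total reach and a simple average reach per ad. It assumes the per-country reach figures are additive, as the reported total implies; the variable names are illustrative.

```python
# Per-country figures from the key findings: (ads, accounts reached).
findings = {
    "Italy": (61, 1_441_543),
    "France": (101, 854_052),
    "Germany": (75, 429_369),
    "Poland": (38, 350_099),
}

total_ads = sum(ads for ads, _ in findings.values())
total_reach = sum(reach for _, reach in findings.values())
print(total_ads, total_reach)  # 275 3075063

for country, (ads, reach) in findings.items():
    share = reach / total_reach   # fraction of all accounts reached
    per_ad = reach // ads         # simple average, rounded down
    print(f"{country}: {share:.1%} of total reach, ~{per_ad:,} accounts per ad")
```

Italy accounts for nearly half of the total reach with fewer ads than France, which reflects a higher average reach per ad there.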