CheckFirst joins researchers and stakeholders advocating for access to public data for public interest research

Keeping platforms accountable is at the core of Check First’s mission and values, particularly when evaluating the impact of algorithmic content recommendation on society. Recent changes in how platforms such as Twitter and YouTube grant access to their data are just a few examples of how platforms can affect civil society’s ability to hold them accountable.

On April 26th, the Mozilla Foundation and a group of civil society experts gathered in Brussels to discuss how the Digital Services Act’s (DSA) public data sharing scheme should work in practice. They drafted a list of recommendations in response to the European Commission’s call for evidence for a Delegated Regulation on data access under the Digital Services Act. CheckFirst is glad to have joined this effort.

Why Check First supports this feedback

We strongly believe that effective enforcement of the DSA relies heavily on the ability of researchers, and of civil society at large, to monitor and audit very large online platforms (VLOPs) and very large online search engines (VLOSEs). Today, the data available through the platforms’ APIs is insufficient and often fails to reflect how content is personalised for users. The letter sets out a series of recommendations urging regulators to define how, and with whom, these platforms must share the data needed for independent audits.

Although API access must be broadened, this alone would not be enough. Platforms control what data their APIs expose, and these APIs do not currently reflect how content is personalised for a given user. This is why, during the CrossOver project [link], Check First used user simulation and scraping as complementary means of collecting data and monitoring what content is presented to users. We therefore unequivocally support the recommendation to make scraping a standard and legitimate technique for monitoring and auditing platforms’ compliance with their obligations. Check First’s work has shown that relying solely on data provided by the platforms themselves is not sufficient to produce accurate reports and analyses of what information the platforms present to citizens. We also support the recommendation to require all platforms to implement a standardised process for data access requests.

Recent events which further motivated us to sign the letter

Fewer and fewer APIs available

As an increasing number of platforms close their APIs to researchers and obstruct access to valuable data, the implications of this trend need to be critically assessed.

Twitter, YouTube, and Reddit are prime examples of this increasing trend.

In February 2023, following its acquisition by Elon Musk, Twitter decided to restrict its open API programme for researchers, requiring them to pay a steep fee of $42,000 per month for access to tweets. The decision was met with consternation in the academic and research community, since Twitter data has long served as a valuable resource for studying human behaviour, social trends, and public sentiment. The exorbitant fee effectively puts this rich dataset out of reach for many researchers, stifling progress in the social sciences, data science, and the digital humanities.

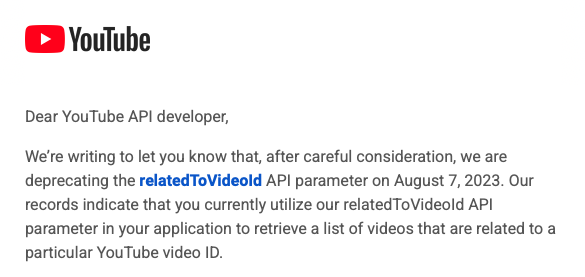

Similarly, in May, YouTube quietly announced to its developers the deprecation of the ‘relatedToVideoId’ parameter of its YouTube Data API. This parameter lets researchers retrieve a list of videos related to a given video, an important tool for studying video content patterns, user behaviour, and YouTube’s recommendation algorithms. Without it, significant avenues of research are effectively blocked, limiting our understanding of YouTube’s content dynamics and their impact on users.

Lastly, in April, Reddit, a platform known for its vibrant communities and open discussions, announced its intention to introduce a fee for the use of its API. This fee applies specifically to products that do not directly contribute value back to the platform. Reddit’s decision further threatens the principles of open data and data accessibility, potentially placing additional financial burdens on researchers.

These actions by Twitter, YouTube, and Reddit, while understandable from a business perspective, undermine the principle of open data, an essential cornerstone of the modern digital ecosystem. They create barriers to information access, severely limiting the ability of researchers to conduct meaningful and socially beneficial studies.

Within the DSA framework, these actions raise concerns about the growing power of digital platforms to control data access. It is imperative to address these issues to strike a balance between the platforms’ legitimate business interests and society’s broader need for data access, transparency, and accountability.

In light of these recent announcements, researchers’ contribution to the conversation becomes even more crucial. These changes will make it harder to understand how content is recommended to users and, therefore, to assess the impact of these recommendations on society. The recommendations put forward to the European Commission, including the call for a standardised data access request process, are a step in the right direction towards ensuring transparency and accountability.

As the landscape of digital platforms continues to evolve, so too must our approach to monitoring their activities. With the backing of the Mozilla Foundation and other civil society experts, as well as the many responses received by the European Commission, the call for better access to data is growing louder. The future of our digital society depends on it, making it incumbent upon regulators to heed these calls and ensure that the appropriate changes are made to keep the digital world transparent, accountable and, ultimately, in service of the public interest.